I’ve lost everything and I don’t know how to get it back. How can I repair my system? All I have is a USB stick with Slax Linux. I’m freaking out because I had a lot of projects on there that I hadn’t pushed to GitHub, as well as my configs and rice. Is there any way to repair my system? Can I get a shell from systemd?

deleted by creator

Oh my God. Flashbacks to the first time I fucked up my Arch installation like a decade ago. This is a solid run-through of a very character-building exercise 😂

[This comment has been deleted by an automated system]

Thank you for the help, but is it possible to do this from Slax Linux? It’s the only USB stick I have on hand.

[This comment has been deleted by an automated system]

/dev/sda1 is not a directory

[This comment has been deleted by an automated system]

How do I unmount it?

deleted by creator

Should it mount /dev/sda2/@?

[This comment has been deleted by an automated system]

It says mount point doesn’t exist.

Thanks so much.

[This comment has been deleted by an automated system]

This is the best comment here.

Pretty sure pacman runs mkinitcpio by itself, but I guess a second time for good measure couldn’t hurt

[This comment has been deleted by an automated system]

I couldn’t figure out how to mount /dev/sda1, so I ran pacman -Syu without it. Once I figured it out and mounted it, pacman now says there is nothing to do.

[This comment has been deleted by an automated system]

Never mind, I ran a script that looped through all the packages in the output of `pacman -Qk` and reinstalled them.
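For anyone landing here later, OP’s fix can be sketched as a small filter. This is a hedged sketch, not OP’s actual script: it assumes `pacman -Qk` prints one summary line per package in the form `name: N total files, M missing files`, and the function name `pkgs_with_missing_files` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `pacman -Qk` output on stdin and print the name of
# every package whose summary line reports at least one missing file.
# Assumed summary format: "name: 1234 total files, 3 missing files"
pkgs_with_missing_files() {
  awk -F': ' '
    / total files?, [0-9]+ missing files?$/ {
      n = $2
      sub(/^.*, /, "", n)   # keep only "3 missing files"
      sub(/ .*$/, "", n)    # keep only "3"
      if (n + 0 > 0) print $1
    }
  '
}
```

Inside the chroot you could then feed the list back to pacman, which reads targets from stdin when given `-`: `pacman -Qk 2>/dev/null | pkgs_with_missing_files | pacman -S -`. Note that `-Qk` only checks whether files exist, so packages with files that are present but truncated will not show up.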

Not an EndeavourOS/Arch user, but I have been in a similar situation.

What I did:

- boot into live USB

- mount the problematic rootfs

- chroot to it

- run pacman update

The ArchWiki has a nice article on chroot.
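The four steps above could look roughly like this as a bash sketch. Assumptions: it runs as root from the live USB, `/dev/sdb2` in the usage is only an example device (check yours with `lsblk`), and the installed system uses pacman. If your root is a btrfs subvolume, the first mount also needs `-o subvol=@` (or whatever your root subvolume is called). `rescue_update` and the `DRY_RUN` switch are hypothetical names, not part of any tool.

```bash
#!/usr/bin/env bash
# Sketch: mount the broken root, chroot in, finish the update, clean up.
# DRY_RUN=1 prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

rescue_update() {
  local root_dev="$1" mnt="${2:-/mnt}"
  run mount "$root_dev" "$mnt" || return 1
  # chroot by itself mounts nothing, so provide the kernel API filesystems
  run mount -t proc proc "$mnt/proc"
  run mount -t sysfs sys "$mnt/sys"
  run mount --rbind /dev "$mnt/dev"
  # keep the later unmount from propagating back to the live system's /dev
  run mount --make-rslave "$mnt/dev"
  # re-run the interrupted update with the installed system's own pacman
  run chroot "$mnt" pacman -Syu
  # unmount everything below $mnt, then $mnt itself
  run umount -R "$mnt"
}
```

Usage: `DRY_RUN=1 rescue_update /dev/sdb2` to preview, then `rescue_update /dev/sdb2` to do it for real.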

Does this work for every system, like Fedora?

Well, except the pacman part. The chroot part should certainly work.

Thank you. Seems it’s fun to delve into. Thanks!

Yup. Mount your disk and chroot into it.

You may need to adapt the last part to your needs.

Example:

- for Fedora, you’d use dnf instead of pacman

- if your bootloader is broken, you’d want to run grub-install or grub-mkconfig

- if your initramfs doesn’t recognize your new partition, you’d want to regenerate it with the current fstab or crypttab
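A hedged sketch of "adapting the last part" once you are inside the chroot. It assumes the usual GRUB config paths (`/boot/grub/grub.cfg` on Arch, `/boot/grub2/grub.cfg` on Fedora) and only covers the two distro families mentioned here; `repair_in_chroot` is a hypothetical helper, and `grub-install` is left out because its arguments depend on your firmware and disk.

```bash
#!/usr/bin/env bash
# Hypothetical helper: pick repair commands for the distro you chrooted into.
# Usage: repair_in_chroot arch|fedora [grub]   (DRY_RUN=1 prints only)

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

repair_in_chroot() {
  local distro="$1" bootloader="${2:-}"
  case "$distro" in
    arch)
      run pacman -Syu || return 1          # finish the interrupted update
      run mkinitcpio -P || return 1        # rebuild the initramfs for every preset
      if [ "$bootloader" = grub ]; then
        run grub-mkconfig -o /boot/grub/grub.cfg
      fi
      ;;
    fedora)
      run dnf distro-sync || return 1      # sync installed packages with the repos
      run dracut --regenerate-all --force || return 1   # rebuild initramfs images
      if [ "$bootloader" = grub ]; then
        run grub2-mkconfig -o /boot/grub2/grub.cfg
      fi
      ;;
    *)
      printf 'unsupported distro: %s\n' "$distro" >&2
      return 1
      ;;
  esac
}
```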

Boot to a live USB of the distro of your choice, create a chroot to your install, and then run a pacman update from there.

Googling “Arch rescue chroot” should point you in the right direction. Good luck!

Will this work from Slax Linux? Sorry if it seems like I can’t fix this myself when you’ve already given me the resources to do so, but what would the exact steps be?

I’ve never used Slax, but it should work: boot the live USB and open a terminal.

The general process is:

- Boot to live Slax

- Mount your install

- Mount /proc, /sys, /dev

- Enter the chroot

- Check if networking is working

- Attempt to run commands in your chroot

- Exit the chroot

- Unmount everything

- Boot back to your install
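For the "Check if networking is working" step: inside the chroot, DNS comes from the installed system’s `/etc/resolv.conf`, which may be a symlink into `/run` (empty from inside the chroot) or point at a resolver that isn’t running. A common workaround, sketched here under the assumptions that the live USB itself is online and the installed root is mounted at `/mnt`, is to borrow the live system’s file temporarily. The function names and the `.rescue` backup suffix are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: give the chroot working DNS, then put the original file back.
# DRY_RUN=1 prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

prepare_chroot_network() {
  local mnt="${1:-/mnt}"
  # set aside the installed system's resolv.conf (mv keeps a symlink as a symlink)
  run mv "$mnt/etc/resolv.conf" "$mnt/etc/resolv.conf.rescue"
  # copy the live USB's working DNS settings into the chroot
  run cp /etc/resolv.conf "$mnt/etc/resolv.conf"
  # quick check: can a mirror host be resolved from inside the chroot?
  run chroot "$mnt" getent hosts archlinux.org
}

restore_chroot_network() {
  local mnt="${1:-/mnt}"
  run mv "$mnt/etc/resolv.conf.rescue" "$mnt/etc/resolv.conf"
}
```

Run `restore_chroot_network` after you exit the chroot and before you unmount everything.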

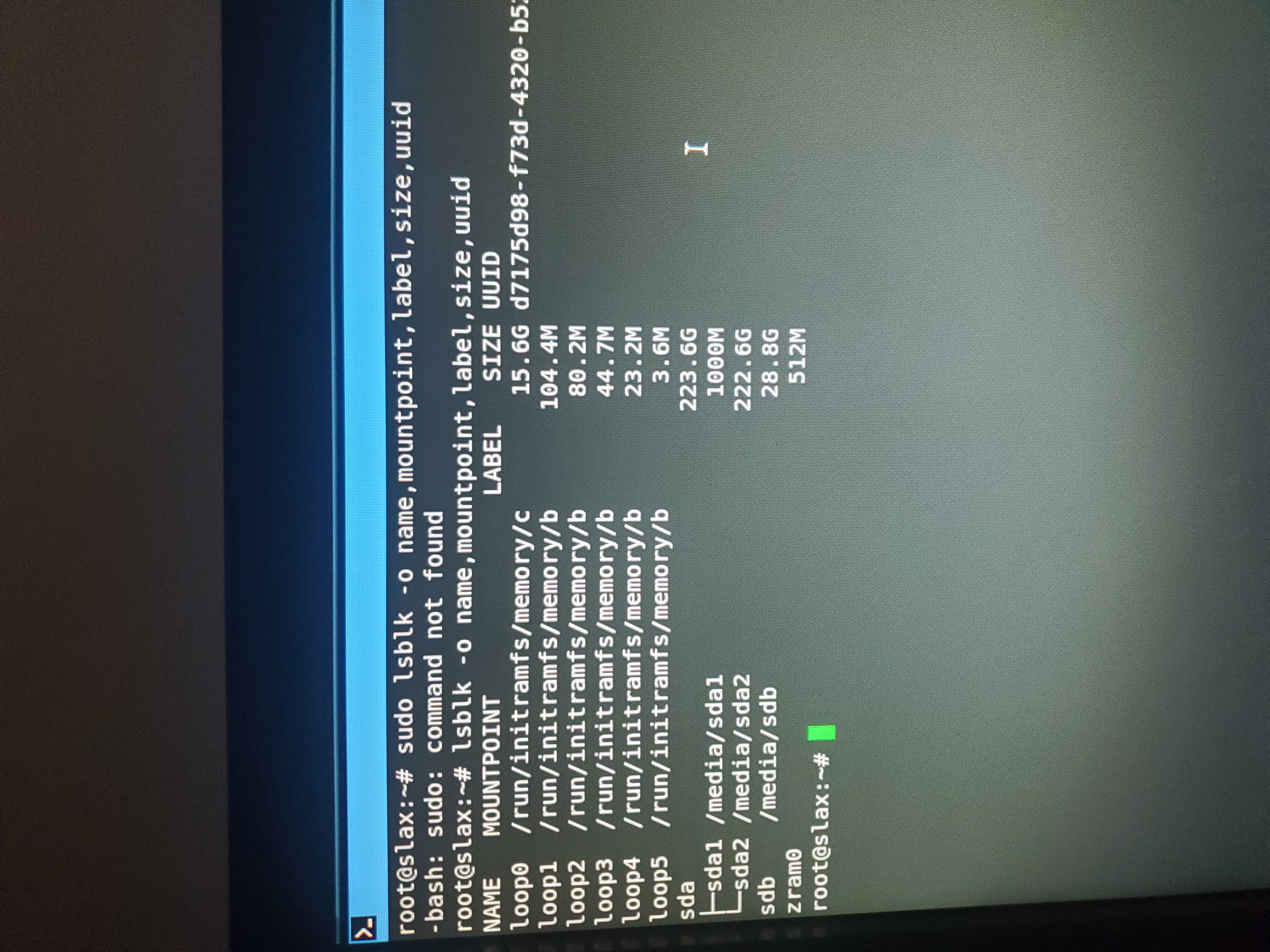

I tried chroot /media/sda2/@/root and it tells me /bin/bash not found.

If you boot to an Ubuntu ISO, you can use arch-chroot to set up everything you need correctly. I’ve done this many times when I borked my Arch boot process.

https://manpages.ubuntu.com/manpages/focal/man8/arch-chroot.8.html

Can I install arch-chroot on slax? I have apt.

It should work. AFAIK chroot always uses the binaries of the system you chrooted into, so you will be able to use pacman normally. chroot doesn’t mount the EFI partition (or anything else) for you, so mount it before you go into the chroot. The same goes for /dev, /proc and /sys: that’s why Arch has arch-chroot, which mounts that kind of stuff for you, but mounting them yourself beforehand works too.
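Since Slax is Debian-based, arch-chroot should be installable from Debian’s `arch-install-scripts` package. That is an assumption worth checking: it depends on what Slax’s apt sources carry. The sketch also assumes it runs as root and that the installed root (and the EFI partition, if needed) is already mounted under `/mnt`; `arch_chroot_update` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: install arch-chroot on a Debian-based live USB and use it.
# DRY_RUN=1 prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

arch_chroot_update() {
  local mnt="${1:-/mnt}"
  run apt-get update || return 1
  run apt-get install -y arch-install-scripts || return 1
  # arch-chroot sets up /proc, /sys, /dev and resolv.conf, runs the command,
  # and unmounts those API filesystems again when the command exits
  run arch-chroot "$mnt" pacman -Syu
}
```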

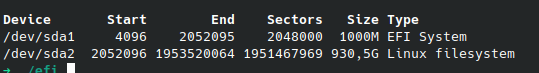

Assuming you are using systemd-boot on the EFI partition (which is likely if you haven’t changed the installer defaults), here’s what I would do:

- On your live CD, run `sudo fdisk -l` to find the EFI partition. It will usually be /dev/sdb1, since sda will be your USB stick; you should see something like the screenshot above.

- Then mount your EndeavourOS partition. In your situation that should be `sudo mount /dev/sdb2 /mnt/mydisk`, but check your fdisk output.

- Now mount the EFI partition: `sudo mount /dev/sdb1 /mnt/mydisk/efi`

- Then run `sudo chroot /mnt/mydisk/` and do a `pacman -Syu`. This should trigger the post-install scripts that create the kernel images on the EFI partition.
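If `pacman -Syu` reports "there is nothing to do" (for example because the update already finished while the EFI partition wasn’t mounted), the kernel hooks won’t run again. Reinstalling the kernel package should re-trigger them. A hedged sketch, assuming the kernel package is `linux`, the installed system expects its ESP at `/efi` (check its `/etc/fstab`; the path is an assumption), and arch-chroot is available (plain chroot works too after mounting /proc, /sys and /dev). `reinstall_kernel` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: mount root + ESP, then reinstall the kernel to re-run pacman's hooks.
# Usage: reinstall_kernel ROOT_DEV ESP_DEV [MOUNTPOINT] [ESP_DIR]
# DRY_RUN=1 prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

reinstall_kernel() {
  local root_dev="$1" esp_dev="$2" mnt="${3:-/mnt}" esp_dir="${4:-/efi}"
  run mount "$root_dev" "$mnt" || return 1
  # the ESP must sit where the installed system expects it
  run mount "$esp_dev" "$mnt$esp_dir" || return 1
  # pacman -S without --needed reinstalls the package even if it is up to
  # date, which runs the post-transaction hooks that write the boot images
  run arch-chroot "$mnt" pacman -S linux
}
```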

Do I just do chroot /media/sda2/@?

You have to chroot into the mount point of your root partition
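With btrfs, the mount point to chroot into is the root *subvolume*. OP’s earlier attempt, `chroot /media/sda2/@/root`, pointed at root’s home folder inside it, which has no /bin/bash. A sketch that mounts the subvolume directly, assuming it is called `@` (OP’s paths suggest so; `btrfs subvolume list` on the top-level mount will confirm). `mount_btrfs_root` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: mount a btrfs root subvolume and chroot into it.
# DRY_RUN=1 prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

mount_btrfs_root() {
  local dev="$1" mnt="${2:-/mnt}" subvol="${3:-@}"
  # subvol= selects the root subvolume instead of the top level of the volume
  run mount -o "subvol=$subvol" "$dev" "$mnt" || return 1
  # chroot into the subvolume's mount point itself, not a folder inside it
  run chroot "$mnt" /bin/bash
}
```

If Slax has already mounted the top level at /media/sda2, `chroot /media/sda2/@` should work the same way.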

This one. I did it recently when my girlfriend uninstalled Python, which was needed by the process that creates the kernel image on the EFI partition, and the same thing happened: the update removed the old images from the EFI partition but wasn’t able to copy the new ones.

deleted by creator

i dunno why you gotta call me out like that…

hey thanks man

for a simple solution: git + gnu stow. life changing.

something like:

- ~/dotfiles/i3/.config/i3/config

- ~/dotfiles/kitty/.config/...

`stow */` (run from ~/dotfiles) symlinks everything nicely :)
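A sketch of the stow step, assuming GNU Stow is installed and every top-level directory in the repo (`i3/`, `kitty/`, ...) mirrors the layout under `$HOME`. It loops explicitly and strips the trailing slash from each directory name before handing it to stow. `stow_all` is a hypothetical name; `-d` sets the stow directory and `-t` the target.

```bash
#!/usr/bin/env bash
# Sketch: symlink every package directory of a dotfiles repo into $HOME.
# DRY_RUN=1 prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

stow_all() {
  local repo="${1:-$HOME/dotfiles}" target="${2:-$HOME}" dir
  for dir in "$repo"/*/; do
    dir="${dir%/}"               # strip the trailing slash left by the glob
    [ -d "$dir" ] || continue    # the glob matched nothing
    run stow -d "$repo" -t "$target" "${dir##*/}"
  done
}
```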

Why version them if you could simply have backups? I rarely want old config files…

I don’t know about you, but I tend to make lots of little changes to my setup all the time. Versioning makes it easy to roll back those individual changes, and to tell which change broke what. Sure, you could accomplish the same thing with backups, but versioning offers additional information with negligible cost.

Why not stick them in a git repo?


Because the benefit gets lost if there are lots of autogenerated config files. Someone else said ‘stick only the files you write yourself in the repo’ and I guess that’s a better idea than to stick all of them in a repo.

Versioning is one of those things that you don’t realize you need until it’s too late. Also, commits have messages that can be used to explain why something was done, which can be useful to store info without infodumping comments into your files.

Why versioning and not backups? I get the part of commit messages, but that’s hardly worth the effort for me. If I have a config file which works, I usually keep it that way. And if it stops working, my old documentation is outdated anyway.

you just push the git repo to a remote somewhere and that’s your backup

Why do we use versioning for code instead of backups? Simple, versioning is better than backups for almost all text files, since it’s a granular backup with change messages etc.

You can ask the same question about code.

I use etckeeper to autocommit changes in /etc, as git just has better and faster tools for looking at the changes to a file than backup tools do.
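A minimal sketch of that workflow, assuming etckeeper is installed with its default git backend (which keeps the repository in `/etc/.git`) and is run as root. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of an etckeeper setup plus a per-file history lookup.
# DRY_RUN=1 prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

etckeeper_setup() {
  run etckeeper init || return 1                # turn /etc into a repository
  run etckeeper commit "initial import of /etc" # first snapshot
}

# History of one or more files, given relative to /etc (e.g. fstab)
etc_changes() {
  run git -C /etc log --oneline -- "$@"
}
```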

It’s just so easy to do that there hardly is any point in not doing it.

I also rarely want old versions of my code, but I still use git. A very nice feature, besides the essential backup quality, is to synchronize dotfiles between machines and merge configs together if they diverge.

I sync them with Syncthing and can also access backups of the old files. I can also merge them with merge tools and create tagged versions with git. Most of the time I don’t, and I can’t think of any instance where I used git to compare an old version with a newer config file. I get why we should version code, but config files for most desktop programs are hardly worth tracking because of the frequent opaque changes.

I only track the dotfiles which I actually write, not the generated ones. So it’s not so different from code. Desktop programs which generate opaque config files suck. I only wish there were a good way to synchronize my Firefox using git. I know there is user.js, but it all seems like a mess to me.

That’s the way. Thank you.

In my experience, it’s shockingly useful. For example, maybe I want to try out a new set of Emacs packages. I can make a branch, mess around for a bit, and even keep that configuration for a week or two. Then if I find out it’s not good, I can switch back.
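The branch workflow described above, as a hedged sketch. It assumes the config directory is a git repo whose stable branch is called `main`; the helper names and the example path are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: try a config experiment on a branch, then drop it or keep it.
# DRY_RUN=1 prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Start an experiment: create and switch to a new branch
try_config()  { run git -C "$1" switch -c "$2"; }
# Didn't work out: go back to main and delete the experiment
drop_config() { run git -C "$1" switch main && run git -C "$1" branch -D "$2"; }
# Worth keeping: go back to main and merge the experiment in
keep_config() { run git -C "$1" switch main && run git -C "$1" merge "$2"; }
```

Usage: `try_config ~/.emacs.d new-packages`, live with it for a while, then `drop_config` or `keep_config` with the same arguments.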

Been here before, but didn’t bother asking for help. Just used a liveusb to grab what I needed and reinstalled. I need to learn how to chroot …

Same, though I did try to chroot. Totally failed though. Luckily I had backups, which I learned never backed up properly.

The lessons I learned were to never trust a GUI and to always make sure your backups are viable. I still have a copy of a duplicity backup from ~2020 that I hope to recover one day, if anything’s in it.

To add to the other responses: after you’ve recovered your stuff, you might like moving to an immutable OS if you often risk power issues. Transactions aren’t applied until everything is done, so if anything happens during one, you’ll just remain at your last usable state.

I had the same thought, but didn’t want to sound insensitive.

Saying “Your fault, using Arch for something important is a bad idea, you should have made a backup before”, while he fears all his important data is gone, would have been rude and very unhelpful.

But immutable distros do solve these issues. Since I switched to Silverblue, I’ve never been more relaxed. If something goes bad, I just select the old state and everything works, and updates never get applied incompletely like here.
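On Silverblue, "select the old state" can be done from the boot menu or from a terminal with rpm-ostree. A small sketch of the terminal route; the function names are hypothetical, and it assumes rpm-ostree still keeps the previous deployment (it does by default).

```bash
#!/usr/bin/env bash
# Sketch for Fedora Silverblue. DRY_RUN=1 prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Show the booted deployment and the previous one you can fall back to
show_deployments() {
  run rpm-ostree status
}

# Make the previous deployment the default again, then boot into it
rollback_and_reboot() {
  run rpm-ostree rollback || return 1
  run systemctl reboot
}
```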

I’m sorry if I sounded insensitive, it wasn’t my intention, just thought that since many others had already given a solution to the data and even OS recovery I could chip in to add something that they might find useful, if they don’t mind switching away from Arch.

I hope mine would be a reassuring suggestion more than anything.

You didn’t! :) You couldn’t have said it better, especially in your answer here!

As I said, I had the same thought as you with immutable distros like SB or Nix.

I just didn’t have much to add as an additional comment besides “Kids, this is why you should always back up and maybe use an immutable distro if you can”.

As someone who values robustness and comfort, I wouldn’t touch something arch-based even with a broom-pole.

If I wanted a rolling release, I would use Tumbleweed or its immutable variant.

For me at least, the only pro of Arch is that you can configure everything exactly as you imagine it, if you know exactly what you’re doing. And EndeavourOS is pretty much a preconfigured Arch that removes its only USP, the DIY element.

I don’t see myself as competent enough to maintain my arch install, but I can access the AUR with distrobox on every other distro, like Silverblue, too, so I don’t care. The big software repository isn’t an argument for me in 2023 anymore. With distrobox my arch stuff is isolated and if something breaks, I can just forget my two installed apps and reinstall this container in 2 minutes.
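The "reinstall this container in 2 minutes" part, sketched with distrobox. Assumptions: distrobox and podman or docker are installed on the host, `archlinux:latest` is the image, and `arch` is a hypothetical container name.

```bash
#!/usr/bin/env bash
# Sketch: an Arch container for AUR apps on a non-Arch host.
# DRY_RUN=1 prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

make_arch_box() {
  run distrobox create --name arch --image archlinux:latest
}

# If something inside breaks, throw the container away and recreate it
reset_arch_box() {
  run distrobox rm --force arch || return 1
  make_arch_box
}
```

Afterwards `distrobox enter arch` gives you a shell in the container.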

It’s just an unimaginable peace of mind for me to know that if I shut down my PC today it will work perfectly tomorrow too. I’m just sick of reinstalling or fixing shit for hours every weekend. I’m too tired for that and have other responsibilities.

But yeah. My thoughts were exactly the same as yours, and I didn’t have much more to add besides saying “Hey, do X so that this won’t happen again in the future” without sounding like Captain Hindsight from South Park (context).

You took the words right out of my mouth.

Btw that clip was hilarious, I hope I don’t come off like that often lol

It wasn’t a power issue; it was me forgetting that I ran yay in the background.

It happens, that’s less of a worry then

Note that this isn’t about immutability but atomicity. Current immutable distros usually have that feature as well, but you don’t need immutability to achieve it.

Yeah, you’re right. However, searching “linux distro with atomic updates” doesn’t seem to turn up much. As you say, in most cases the two features happen to come together, and the distros that have them are mostly known for the former.

If nothing else, your files are all fine. You can mount your drive on a different system (like a live USB) and copy all your files.
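Copying the files off from the live USB could look like this. Assumptions: rsync is available on the live system, the installed system’s home (on btrfs possibly the `@home` subvolume, mounted with `-o subvol=@home`) is mounted read-only under `/mnt`, and `/media/backup` is a hypothetical mount point for the destination drive. `backup_home` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: copy a home directory from the broken install to another drive.
# DRY_RUN=1 prints the command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

backup_home() {
  local src="$1" dest="$2"
  # -a preserves permissions, timestamps and symlinks; the trailing slash on
  # the source copies the directory's contents rather than the directory itself
  run rsync -a --info=progress2 "$src/" "$dest/"
}
```

Usage: `backup_home /mnt/home/yourname /media/backup/yourname`.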

Thank you all for offering advice. I did eventually get it working and repaired all the packages.

Other people will probably give you better answers, but I think the solution is quite easy: chroot and relaunch the update.

Pretty much all the other answers are coming down to this, yeah.

There is nothing worth freaking out about in your situation. Your files shouldn’t have been impacted at all.

Boot from LiveUSB and reinstall the packages you were updating, maybe reinstall grub too.

There are tons of guides for this in the Internet, like this one: https://www.jeremymorgan.com/tutorials/linux/how-to-reinstall-boot-loader-arch-linux/

Edit: since you probably use systemd-boot, as I can see from your post, the GRUB part of my comment obviously shouldn’t be done. Replace those parts with reinstalling systemd-boot. Even better if pacman updates it for you: there’s probably already a hook that does this, so you won’t have to touch systemd-boot at all.

Assuming you have access to a secondary computer to make a live USB:

- Boot a live disk/USB on your PC and copy the data you want off. Then reinstall the OS.

- If you haven’t got a drive you can move the data to, partition your disk from the live OS and move the data to the new partition. CAUTION WHEN PARTITIONING!

Does Timeshift work on Arch? If so I would look into it, saved my ass a few times.

Timeshift definitely works on Arch (I use it before every update), but it isn’t going to help OP if he hasn’t taken a snapshot already.

Yeah I know, that was a somewhat pretentious “backups” hint.
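The "Timeshift before every update" habit as a sketch. Assumptions: Timeshift is already configured (rsync or btrfs mode) and the function runs as a normal user, since yay is not meant to run as root; only the timeshift call goes through sudo. `snapshot_then_update` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: take a Timeshift snapshot, and only update if that succeeded.
# DRY_RUN=1 prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

snapshot_then_update() {
  run sudo timeshift --create --comments "pre-update" || return 1
  run yay -Syu
}
```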

Boot a live Linux, chroot into your system, run pacman again and fix your systemd boot to include a fallback option for the next time this happens.
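A fallback entry for systemd-boot could look roughly like the fragment below. It is a sketch for a plain Arch layout where the kernel and initramfs images sit at the top of the ESP; EndeavourOS generates its own entries and may lay files out differently, the file name is hypothetical, `<your-root-uuid>` is a placeholder to fill in from `blkid`, and `rootflags=subvol=@` only applies to a btrfs root in a subvolume named `@`.

```
# <ESP>/loader/entries/arch-fallback.conf  (hypothetical file name)
title   Arch Linux (fallback initramfs)
linux   /vmlinuz-linux
initrd  /initramfs-linux-fallback.img
options root=UUID=<your-root-uuid> rootflags=subvol=@ rw
```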

Here are some pictures of the current state of my system.

Can’t help but I just did this myself. Was a fairly fresh install so I didn’t lose anything other than have to reconfigure some stuff and install some things.

Buuuuut

What happened to me was that something crashed during the update and my computer went to a black screen. So I just left it for a bit, hoping it would finish even without the display. When I turned the computer off, my NVMe was just gone. I ended up having to get a new one.

Did systemd or grub not even show up?

Nope. Honestly, I think the drive just died at a bad time. I’ve had issues with it in the past, and the computer itself came from an e-scrap pile because the water pump for the CPU cooler was dead. It had worked great since I swapped that out, until the NVMe died. Even after installing the new NVMe and reinstalling EOS, I couldn’t see the old one.