The American Matthew Butterick has started a legal crusade against generative artificial intelligence (AI). In 2022, he filed the first lawsuit in the history of this field against Microsoft, one of the companies that develop these types of tools (GitHub Copilot). Today, he’s coordinating four class action lawsuits that bring together complaints filed by programmers, artists and writers.

If successful, he could force the companies responsible for applications such as ChatGPT or Midjourney to compensate thousands of creators. They may even have to retire their algorithms and retrain them with databases that don’t infringe on intellectual property rights.

Luddites smashing power looms.

It’s worth remembering that the Luddites were not against technology. They were against technology that replaced workers, without compensating them for the loss, so the owners of the technology could profit.

Their problem was that they smashed too many looms and not enough capitalists. AI training isn’t just for big corporations. We shouldn’t applaud people who put up barriers that will make it prohibitively expensive for regular people to keep up. This will only help the rich and give corporations control over a public technology.

It should be prohibitively expensive for anyone to steal from regular people, whether it’s big companies or other regular people. I’m no more enthusiastic about people stealing from artists to create open source AIs than I am when corporations do it. For an open source AI to be worth the name, it would have to use only open source training data, i.e., stuff that is in the public domain or has specifically had an open source licence assigned to it. If the creator hasn’t said they’re okay with their content being used for AI training, then it’s not valid for use in an open source AI.

I recommend reading this article by Kit Walsh, a senior staff attorney at the EFF, if you haven’t already. The EFF is a digital rights group that most recently won a historic case: border guards now need a warrant to search your phone.

People are trying to conjure up new rights to take another piece of the public’s rights and access to information, to fashion themselves as a new owner class. Artists and everyone else should accept that others have the same rights as they do, and they can’t now take those opportunities from other people because it’s their turn now.

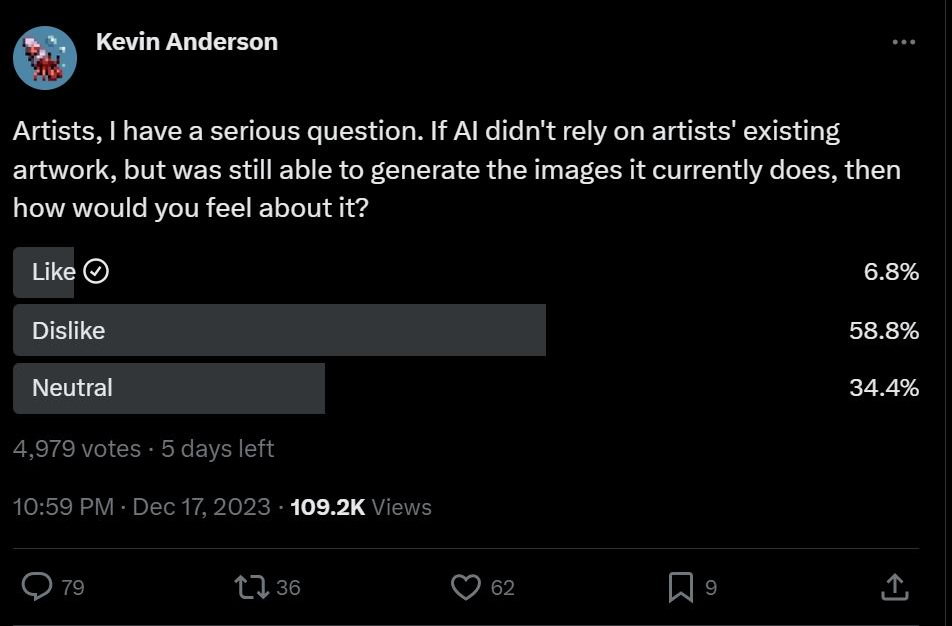

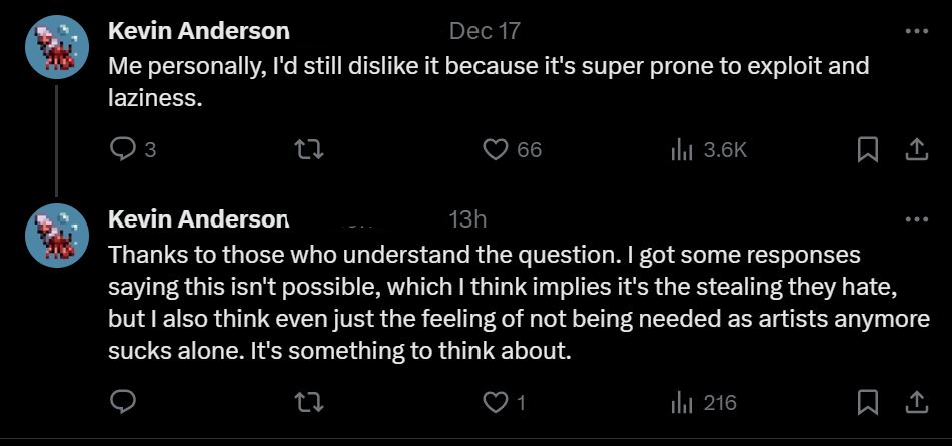

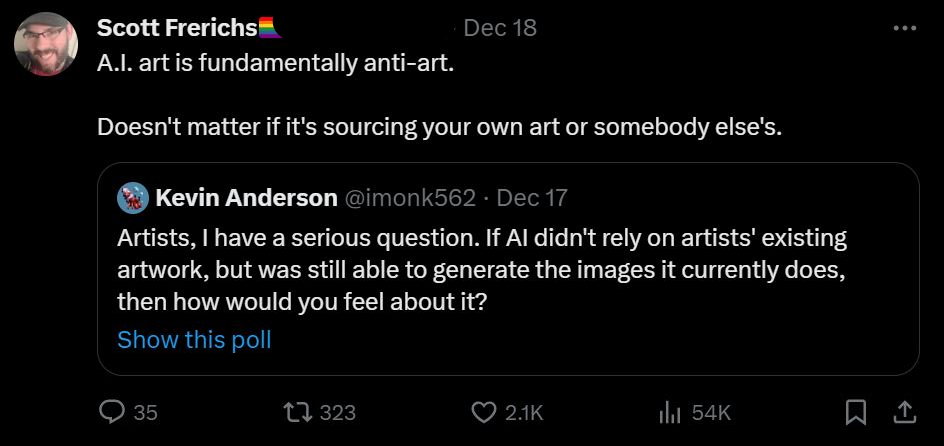

There’s already a model trained on just Creative Commons licensed data, but you don’t see them promoting it. That’s because it was never about the data; it’s an attack on their status. When asked about generators that didn’t use their art, they came out overwhelmingly against them, with the same condescending and reductive takes they’ve been using this whole time.

I believe that generative art, warts and all, is a vital new form of art that is shaking things up, challenging preconceptions, and getting people angry - just like art should.

Moreover, Luddites were opposed to the replacement of independent at-home workers by oppressed factory child labourers, much like OpenAI aims to replace creative professionals with an army of precarious, poorly paid microworkers.

I don’t see the US restricting AI development. No matter what is morally right or wrong, this is strategically important, and they won’t kneecap themselves in the global competition.

Strategically important how exactly?

Great power competition / military-industrial complex. AI is a pretty vague term, but practically it could be used to describe drone swarming technology, cyber warfare, etc.

LLM-based chatbots and image generators are the types of “AI” that rely on stealing people’s intellectual property. I’m struggling to see how that applies to “drone swarming technology.” The only obvious use case is in the generation of propaganda.

> The only obvious use case is in the generation of propaganda.

It is indeed. I would guess that’s the game, and is already happening.

> If successful, he could force the companies responsible for applications such as ChatGPT or Midjourney to compensate thousands of creators. They may even have to retire their algorithms and retrain them with databases that don’t infringe on intellectual property rights.

They will readily agree to this after having made their money, and then use their ill-gotten gains to train a new model. The rest of us will have to go pound sand, as making a new model will have become prohibitively expensive. Good intentions, but it will only help them by pulling up the ladder behind them.

Dumbass. YouTube has single-handedly proven how broken the copyright system is, and this dick wants to make it worse. There needs to be a fairer rebalancing of how people are compensated and for how long.

What exactly that looks like I’m not sure but I do know that upholding the current system is not the answer.

I’m an artist and I can guarantee his lawsuits will accomplish jack squat for people like me. In fact, if successful, it will likely hurt artists trying to adapt to AI. Let’s be serious here, copyright doesn’t really protect artists, it’s a club for corporations to swing around to control our culture. AI isn’t the problem, capitalism is.