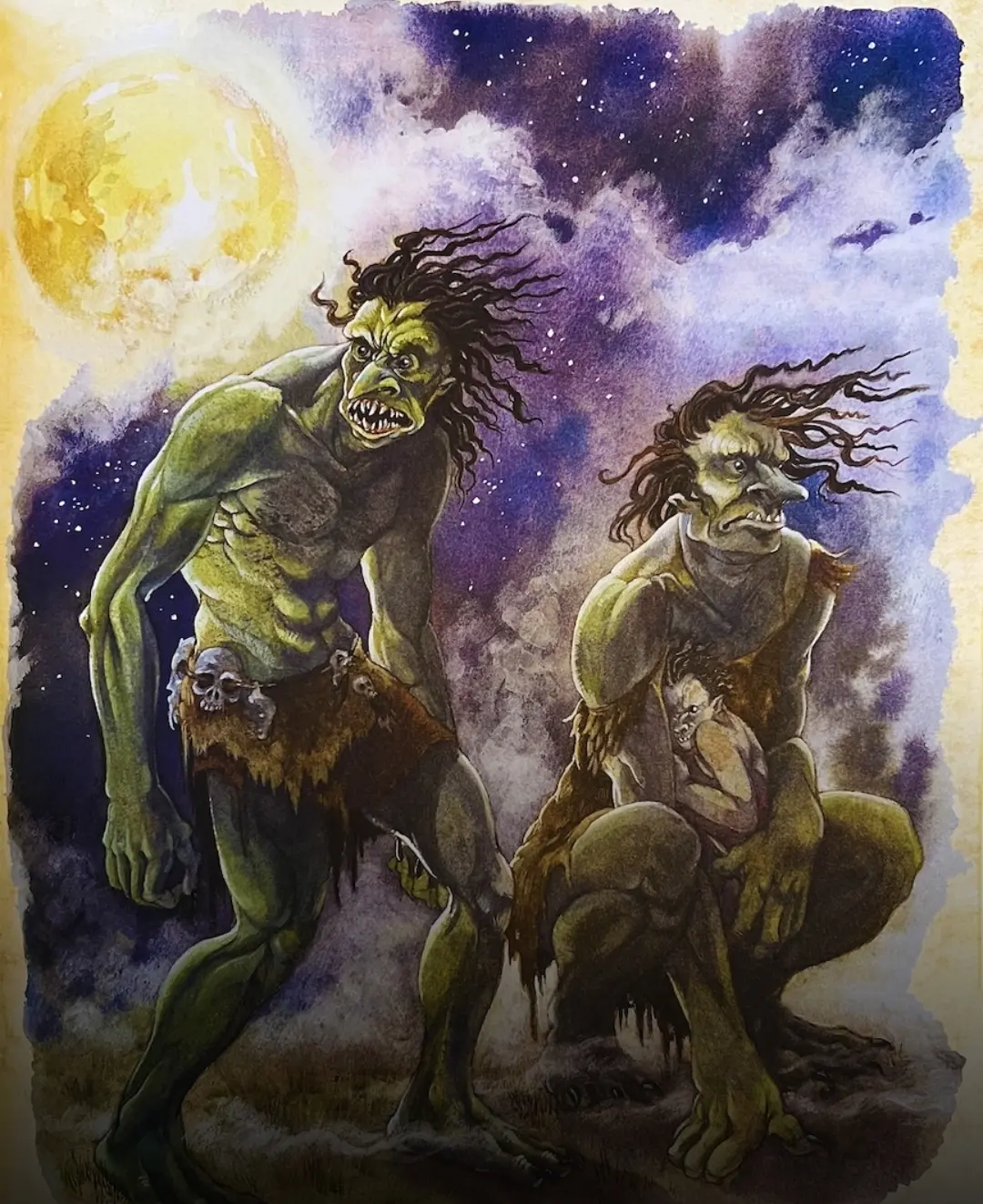

D&D also explicitly describes female trolls, and they are described as larger and stronger than the males. Here’s a happy troll family from a D&D sourcebook, lol.

Again, I'm not saying you can’t enjoy something or use it in an RPG just because the person who wrote it may have had problematic opinions or because it has a mixed history. For example, I really enjoy Lovecraft, but holy crap is his work a mess of racist undertones, thanks to the author's own views.

Here's some more important context for people.