Try your local library.


I feel lucky to have avoided this so far. It’s really not like this on my team. I write a fair bit of code and review a ton of code.

I love that Porco being a pig is treated as mildly weird. His relationship with the fascist government matters more to the plot than the fact that he’s a pig. No one else is an animal. It’s just a thing that happened to him. You can tell it’s a big deal to him, but no one else really cares. You could remove the pig part and the story would still work fine. It just makes his regret and inadequacy more obvious.

I think I like Howl’s Moving Castle more. But it’s close. That one gave me a whole author.

I think it was the EPA’s National Computer Center. I’m guessing based on location, though.

When I was in high school, we toured the local EPA office. They had more data accessible in person than I’ve seen anywhere else. I’m going to guess how much.

It was a dome with a robot arm that spun around and grabbed tapes. It was 2000, so I’m guessing 100 GB per tape. But my memory of the shape of the tapes isn’t good.

Looks like tapes were four inches tall. Let’s round up to six inches for housing and easier math. The dome was taller than me. Let’s go with 14 shelves.

Let’s guess a six-foot shelf diameter. So, like 20 feet of circumference. Tapes were maybe 0.8 inches a pop. With space between them for robot fingers and stuff, let’s guess 240 tapes per shelf.

That comes out to about 300 terabytes. Oh. That isn’t that much these days. I mean, it’s a lot. But these days you could easily fit that on spinning disks, with no robot arm seek time. With modern tapes in the same robot, though, it’d be about 60 petabytes.
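Here’s the back-of-the-envelope math as a quick Python sketch. The shelf and tape counts are the guesses above; the 18 TB per modern tape is my assumption (roughly an LTO-9 cartridge’s native capacity), picked because it’s what lands near 60 PB.

```python
# Rough guesses from the post.
SHELVES = 14
TAPES_PER_SHELF = 240  # ~240 in of shelf at ~0.8 in per tape, minus room for the arm
GB_PER_TAPE_2000 = 100

# Assumption: a modern cartridge holds ~18 TB native (roughly LTO-9).
TB_PER_TAPE_MODERN = 18


def library_capacity(tapes: int, per_tape: float) -> float:
    """Total capacity, in whatever unit per_tape is given in."""
    return tapes * per_tape


tapes = SHELVES * TAPES_PER_SHELF
tb_in_2000 = library_capacity(tapes, GB_PER_TAPE_2000) / 1000   # GB -> TB
pb_modern = library_capacity(tapes, TB_PER_TAPE_MODERN) / 1000  # TB -> PB

print(f"{tapes} tapes, ~{tb_in_2000:.0f} TB then, ~{pb_modern:.1f} PB now")
```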

I’m not sure how you’d transfer it these days. A truck, presumably. But you’d probably want to transfer a copy rather than disassemble it. That sounds slow too.
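A quick sketch of why copying sounds slow. Assumptions, none of them from the post: about 60.48 PB of data (the modern-tape figure), and each copy stream running near LTO-9’s rated native speed of about 400 MB/s. A real copy also needs drives reading and writing at once, so treat these numbers as optimistic.

```python
SECONDS_PER_DAY = 86_400

# Assumptions for this sketch, not measurements.
TOTAL_BYTES = 60.48e15             # ~60.48 PB
BYTES_PER_SEC_PER_STREAM = 400e6   # ~400 MB/s, roughly LTO-9 native


def copy_days(total_bytes: float, streams: int,
              rate: float = BYTES_PER_SEC_PER_STREAM) -> float:
    """Days to copy total_bytes with `streams` parallel copies at `rate` bytes/s each."""
    return total_bytes / (streams * rate) / SECONDS_PER_DAY


for streams in (1, 10, 100):
    print(f"{streams:>3} streams: ~{copy_days(TOTAL_BYTES, streams):,.0f} days")
```

Doubling the streams halves the time, so the real question is how many drives you can keep fed at once.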

I’m not looking at the man page, but I expect you can limit it if you want, and the parser for the parameter knows about these names. If it were me, there’d be one parser for byte-size values, and it’d work for chunk size and limit and sync interval and whatever else dd does.
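A minimal sketch of that “one parser for byte sizes” idea in Python. This is not dd’s code. The suffix table is loosely modeled on GNU dd’s convention (K is 1024, kB is 1000, and so on up the prefixes), and the option names in `SIZE_OPTIONS` are hypothetical.

```python
import re

# Hypothetical suffix table, loosely modeled on GNU dd's convention:
#   bare letter or "iB" (K, KiB, M, MiB, ...) -> powers of 1024
#   letter + "B"        (kB, KB, MB, ...)      -> powers of 1000
_EXPONENTS = {"K": 1, "M": 2, "G": 3, "T": 4, "P": 5, "E": 6}
_SIZE_RE = re.compile(r"(\d+)(?:([kKMGTPE])(iB|B)?)?")

# Hypothetical option names; the point is that they all share one parser.
SIZE_OPTIONS = {"bs", "limit", "sync_interval"}


def parse_size(text: str) -> int:
    """Parse a byte size such as '512', '4K', '10MB', or '1GiB' into bytes."""
    match = _SIZE_RE.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"not a byte size: {text!r}")
    number, letter, unit = match.groups()
    if letter is None:
        return int(number)
    base = 1000 if unit == "B" else 1024
    return int(number) * base ** _EXPONENTS[letter.upper()]


def parse_args(args: list[str]) -> dict[str, object]:
    """Parse dd-style key=value arguments, sending size options through parse_size."""
    opts: dict[str, object] = {}
    for arg in args:
        key, _, value = arg.partition("=")
        opts[key] = parse_size(value) if key in SIZE_OPTIONS else value
    return opts


print(parse_args(["if=/dev/zero", "bs=4K", "limit=1GB", "sync_interval=64MiB"]))
```

Adding a new size-valued option is then one entry in `SIZE_OPTIONS` rather than another parser.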

Also, it’s probably limited by the width of the integer doing the counting. I think dd reports the number of bytes copied at the end, even in unlimited mode.
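Some rough numbers on that counter limit, assuming (I haven’t checked) that the byte count lives in a 64-bit integer. Signed versus unsigned changes the ceiling by a factor of two, so this shows both, plus how long it’d take to hit the signed limit at a hypothetical 1 GB/s.

```python
EIB = 1024**6  # one exbibyte
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Assumption: the counter is 64 bits wide; signedness unknown, so show both.
SIGNED_MAX = 2**63 - 1
UNSIGNED_MAX = 2**64 - 1


def years_to_overflow(limit: int, bytes_per_second: float) -> float:
    """Years of nonstop copying at bytes_per_second before a byte counter passes `limit`."""
    return limit / bytes_per_second / SECONDS_PER_YEAR


print(f"signed 64-bit ceiling:   {(SIGNED_MAX + 1) // EIB} EiB")
print(f"unsigned 64-bit ceiling: {(UNSIGNED_MAX + 1) // EIB} EiB")
print(f"years to hit the signed limit at 1 GB/s: {years_to_overflow(SIGNED_MAX, 1e9):.0f}")
```

At those numbers the counter probably isn’t the practical limit.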

It’s cute. Maybe my favorite use of AI I’ve seen in a while.

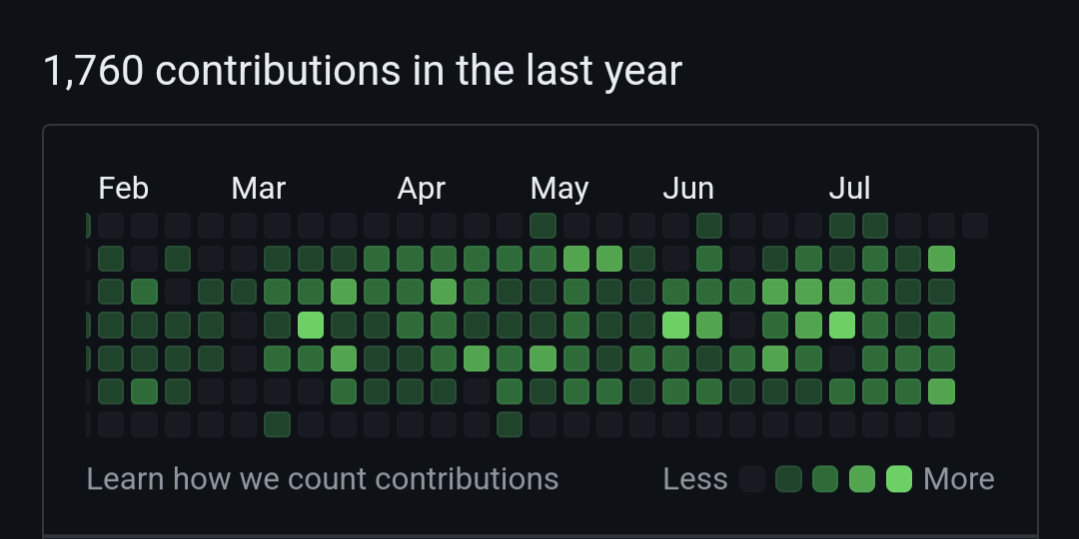

I wish it looked at contributions instead of just the profile page. Much more accurate roasting.

I work on a language for a living. It’s fun! It’s a job. But it’s fun.

I’m not super involved with the traditional language parts: the design, parsing, and optimization. I spend most of my time on the runtime. We’re embedded in another big system, and there are a lot of things to do to make that nice.

I spent the day wiring up more profile information for the times the runtime has to go async. Then I’ll fix up some docs generation stuff. Eventually I’ll get back to a fun shadowing edge case in the new syntax I’m building.

What a fun tool! It only looks at your public projects rather than your activity, I think. But it really is neat. Good use of AI. Nik approved.

Mine looks a little like that. It’s my job though. Everything’s on GitHub.

I think the technologies are pretty bubble-based. We’re 80/15/5 Mac/Linux/Windows, and it’s been 15 years since I worked on a software team that’s mostly Windows. I still talk to those teams from time to time. But if anything, Mac feels underrepresented compared to my bubble.

I admit I’m probably biased in favor of believing the survey is representative. I work on one of the databases.

Speaking of databases, I don’t work on SQL Server, but I can see the appeal. It implements a huge array of features, and its documentation is pretty good. Folks have told me it’s a lovely database to use.

I used Gerrit and Zuul a while back at a place that really didn’t want to use GitHub. It worked pretty well, but it took a lot of care and maintenance to keep it all ticking along for a bunch of us.

It has a few features I loved that GitHub took years to catch up to. Not sure there’s a moral to this story.

When someone is having a computer problem I ask them to restart first. Not because I think they don’t know to do it, but just in case. Some people don’t know. Sometimes people forget. Obvious advice is useful sometimes.

Years of experience

Good for them!

Yeah! Like that!

Usually I use glob patterns for test selection.

But I did use regex yesterday to find something else: a Java security file definition.

I dunno about stdx as a solution. It’s just not a big enough list.

At work we build a big Java thing and we:

It’s still not enough. But it helps.

Maybe a web of trust for audited dependencies would help. This version of this repo under this hash. I could see stdx stuff being covered by the Rust core folks, and I’m sure some folks would pay for bigger webs. We pay employees to audit dependencies. Sharing that cost via a trusted third party or foundation or something feels eminently corporate. Maybe even possible.
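A toy sketch of the “this version of this repo under this hash” idea. Everything here is hypothetical: the `Audit` record, the auditor names, and the placeholder hashes. A shared pool of audits gets filtered down to the auditors you trust, and anything in your lockfile that isn’t covered still needs a human.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Audit:
    """One auditor vouching for one exact artifact."""
    repo: str
    version: str
    sha256: str
    auditor: str


Dep = tuple[str, str, str]  # (repo, version, sha256) as pinned in a lockfile


def unaudited(lockfile: list[Dep], audits: list[Audit], trusted: set[str]) -> list[Dep]:
    """Return lockfile entries that no trusted auditor has signed off on."""
    approved = {(a.repo, a.version, a.sha256) for a in audits if a.auditor in trusted}
    return [dep for dep in lockfile if dep not in approved]


# Placeholder data, not real audits or hashes.
audits = [
    Audit("example/parser", "1.0.0", "aaa111", "rust-core"),
    Audit("example/http-client", "2.3.1", "bbb222", "some-foundation"),
    Audit("example/http-client", "2.3.1", "ccc333", "untrusted-rando"),
]
lockfile = [
    ("example/parser", "1.0.0", "aaa111"),
    ("example/http-client", "2.3.1", "ccc333"),
    ("example/left-pad", "0.1.0", "ddd444"),
]

for repo, version, digest in unaudited(lockfile, audits, {"rust-core", "some-foundation"}):
    print(f"needs review: {repo} {version} ({digest})")
```

The hash is part of the key on purpose: an audit of 2.3.1 under one hash says nothing about a 2.3.1 that hashes differently.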

Many years ago the Unicode Consortium had a fundraiser where you could sponsor an emoji. Someone at my company sponsored one and posted about it to the internal mailing list. Short story short, a couple dozen of us sponsored stuff, and the company paid us back and wrote a cute blog post. Cheap marketing. Felt good.